The Singularity Is Further Than It Appears

Are we headed for a Singularity? Is AI a threat? Not anytime soon. Lack of incentives means very little strong AI work is happening. And even if we did develop one, it’s unlikely to have a hard takeoff.

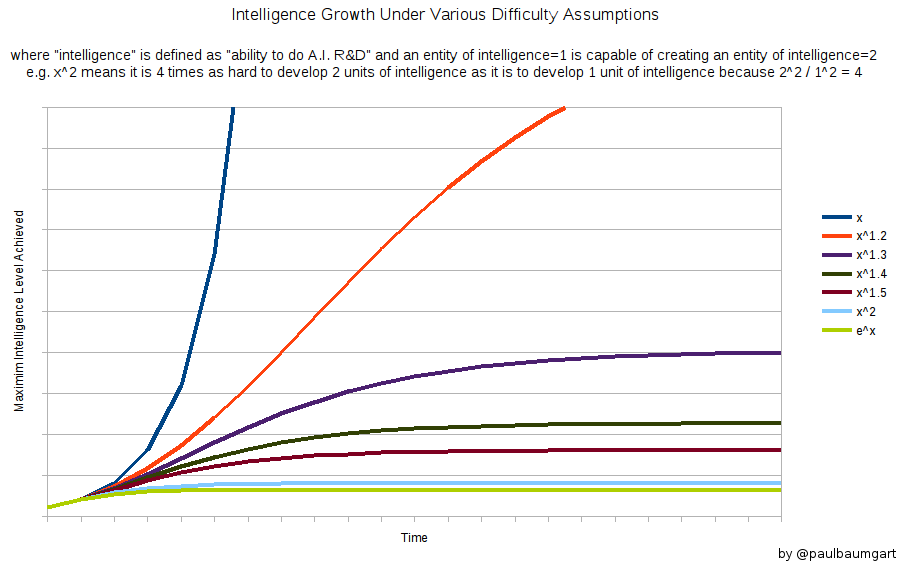

By analogy, if designing intelligence is an N^2 problem, an AI that is 2x as intelligent as the entire team that built it (not just a single human) would be able to design a new AI that is only about 40% more intelligent than its old self. More importantly, that new AI would only be able to create a new version that is 19% more intelligent. The gain shrinks again on the next iteration, and again on the one after that, topping out at an overall doubling of its intelligence. Not a takeoff.
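The arithmetic behind those percentages can be checked with a short sketch. It rests on one reading of the "N^2 problem" claim, which is an assumption rather than something the text spells out: if design effort grows as the square of the intelligence being designed, then a designer with a given advantage over its own builders can only turn that advantage into a gain equal to its square root. The function name `improvement_series` and its parameters are made up for illustration.

```python
def improvement_series(initial_advantage=2.0, exponent=2.0, steps=10):
    """Return a list of (per-step gain, cumulative gain) pairs.

    Assumption: each generation's gain over its predecessor is the
    previous advantage raised to 1/exponent (a square root when the
    problem is N^2). This is one interpretation of the argument,
    not a model taken from the text.
    """
    results = []
    gain = initial_advantage  # the first AI is 2x its design team
    cumulative = 1.0          # total improvement relative to the first AI
    for _ in range(steps):
        gain = gain ** (1.0 / exponent)  # diminishing return at each step
        cumulative *= gain
        results.append((gain, cumulative))
    return results


if __name__ == "__main__":
    for step, (gain, total) in enumerate(improvement_series(), start=1):
        print(f"generation {step}: +{(gain - 1) * 100:.1f}% "
              f"(cumulative {total:.3f}x)")
```

Under this assumption the first gains come out to roughly 41%, 19%, and 9%, and the cumulative factor creeps toward 2 without ever reaching it, because the exponents 1/2 + 1/4 + 1/8 + ... sum to 1. Raising `exponent` makes the plateau arrive even sooner.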

There are already entities with vastly greater-than-human intelligence working on the problem of augmenting their own intelligence. A great many, in fact. We call them corporations. And while we may have a variety of thoughts about them, not one has achieved transcendence.

Sentience brings no advantage to the companies [like Google] that build these software systems. Building a sentient AI would entail an epic research project, one of unknown length involving uncapped expenditure for potentially decades, with no obvious payoff. So why would anyone do it?

You can have your AI secretary or AI assistant and have it be all artifice. And frankly, we’ll likely prefer it that way. If we design an AI that truly is sentient, even at slightly less than human intelligence, we’ll suddenly be faced with very real ethical issues. Can we turn it off? Would that be murder? Can we experiment on it? Does it deserve privacy? What if it starts asking for privacy? Or freedom? Or the right to vote?