Ubiquitous Text 2 Image

“buckskin and fur” by Naomi

From August 1st to 6th, 2022, the Harbour Collective organized a workshop for six Indigenous Canadian artists where we investigated the latest machine learning / AI art tools, particularly tools that transform text into images (text-2-image). The two main tools used were MidJourney and DALLE-2.

During the week, as the participants grew more familiar with the tools, and particularly after we were able to create our own community online on the Discord platform where image prompts and generated images could be shared just with the group, the usage patterns began to change.

The Discord chat app can be used as the interface for generating images with MidJourney. Because a text chat is the main interface, each prompt and its generated image is automatically recorded and shared with the group. This makes the process very transparent and increases knowledge transfer as participants learn from each other’s experiments. This led to “conversations” through images, collaborations on prompt experiments, and collaborative collages.

“woodland style painting, two buffalo kissing” by Dayna

Image Conversations

It takes about as long to write a prompt as it does to send a text or chat message, so generated images started to be used in similar situations. For example, artists started having late-night conversations through images after going back to their own residences. As a group, we started using images to comment on conversations that were happening at lunch and dinner. Instead of, or in addition to, making a witty quip in conversation, participants could respond with a quickly generated image, either by showing their phone or by posting it so that everyone could check their phone’s Discord app and see the newest post.

The generative AI added an often funny and novel perspective to the context of the conversation. A response to something said in conversation might be “I wonder what that would look like?” After a first test prompt and image, the group might have a conversation about how to translate the concept into language the machine could interpret more easily.

“Buffy Sainte Marie styled in Andy Warhol paintings” by Naomi

Exploring New Styles

Artists often ended up exploring styles very different from their usual work. The ease and speed of experimentation encouraged them to explore. The “miscommunication” between what they imagined the AI might generate from their prompt and the actual results, combined with the close-to-finished quality of the images (rather than rough sketches), allowed the participants to discover and fall in love with images far outside their comfort zone.

The generative AIs have their own interpretation of language and the representations and associations between language and images are learned from text and images on the internet. Participants discovered there were still missing styles and images, particularly around Indigenous artists, 2-spirit and queer artists. Indigenous images that were represented were from many different areas in the world but with an uneven amount of representation.

Authorship / Ownership

One of the important new subjective experiences artists investigated was their concept of authorship of the images. The machine generated the images, so how much of the results felt as though they came from the artist? A paintbrush is not generally granted any authorship, nor is image editing software or other digital tools, but with text-2-image tools no part of the image is made directly by the artist.

Is the artist the one with the concept or the one executing the concept? Wealthy conceptual artists certainly claim the former, but what about cases like Clancy Gebler Davies vs William Corbett, who both think they are the sole photographer of an image conceived of and modelled by Davies but executed by Corbett? US copyright law has a history of denying copyright to non-humans, and who would be granted copyright if the same text prompts were given by an artist to another human artist they were paying to paint for them? Work for hire usually grants copyright to the paying (conceptual) artist. But if copyright is granted that way, then what happens when two people give the machine the same prompt and generate a similar or nearly identical image? It seems fundamentally broken to have a “land rush” copyright model for text-2-image, and it makes no sense that the first use of a technique with a particular tool should grant copyright over that technique. Technique (in this case a particular prompt and particular AI) in general cannot be copyrighted, only the final expression.

Some artists felt that some amount of intervention or labour on the images themselves was needed to change the generative piece from machine-made to something the participant felt was their art. Participants were generally reluctant to publish a piece that had no intervention.

“wolf soft yellow warm red earthy brown light blue thunderbird walk slowly stands tall he who paints the day” by Jason

A Practice of Honest Curation

Both DALLE and MidJourney generate more than one image for each text prompt, inviting artists to curate which image they like the most from the (usually four) options presented. MidJourney creates four smaller images, from which you choose which, if any, to “upscale” into larger, more detailed versions.

Text-2-image is surprisingly exhausting work because of the number and variety of honest decisions it presents. By honest, I mean that considerations external to the visual representation are reduced – you aren’t worrying about insulting or discouraging someone who has tried their best to create an image. The machine doesn’t have feelings to hurt. This honest curation is excellent practice. The curation also varies between easy decisions (something that is “obviously terrible”) and very subtle distinctions in theme and quality between images. This practice of curation is very useful for learning one’s own taste, style and aesthetics. We found that shared curation, having multiple artists comment on and pick out their favourite images from the options, was a fun and informative way to learn preferences and see subjective quality from your own and others’ perspectives.

Curation wasn’t just in image selection; it was also integrated into choosing prompts, especially when everyone’s prompts were shared. Successful prompts involved a variety of skills: a mix of detective deduction, trial and error, intuition, and imagining a subjective internal vision, translating that to language, then translating those words into a prompt that you think will evoke the same vision in the machine mind.

Good Looking Experiments

This document is filled with beautiful images, but it is important to note that these are unedited results from the AI image generators. Indeed, these are just experiments, and should be considered sketches. Yet, despite being sketches, they look and feel like finished works. This is something quite novel to artistic practice.

The ease with which documents can now add high-quality imagery means both that the quality and clarity of documents can increase and that laboriously hand-crafted visual imagery is no longer required, especially as incidental or supplemental imagery. The implications may transform the stock image industry, illustration for hire, and most commercial art. For example, it may become popular to give custom-printed cards with beautiful imagery tailored to the recipient as gifts, and businesses and homes may have prints that are unique to that place but in a very popular artist’s style. This essentially combines two existing profit-driven strategies: what is popular and what is unique.

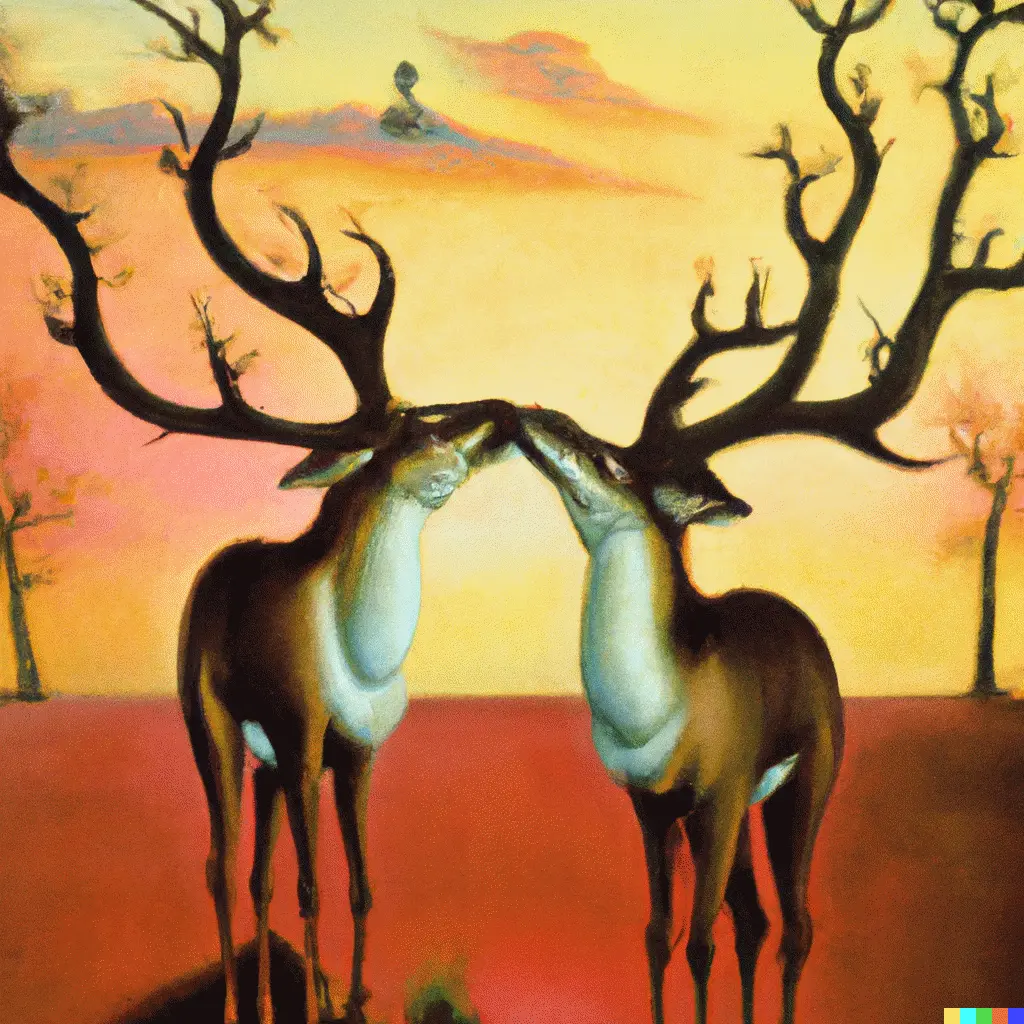

“a surrealist dream-like oil painting by Salvador Dali of two deers kissing” by Dayna

Will we tire of this?

One outstanding question that a week’s experimentation couldn’t answer is whether people will see text-2-image as a fad or something more enduring. Ubiquitous text-2-image technologies could drastically change the media landscape, perhaps similarly to how easily accessible video production on phones has transformed media, first on YouTube and then on TikTok. Will people create more images, perhaps even becoming prolific artists, even if only ever in the style of their favourite traditional artist? Cover bands exist now, but will there be “cover visual artists”?

Perhaps, like many digital tools, their overuse will create a permanent backlash against their usage. Text-2-image as gauche, or “cheating” (not “real” art), or as exploitative of “real” artists could become the dominant perception of these tools.

Thanks

Thanks to all the participants and facilitators for their thoughtful and joyful experimentation and art making. All images included in this document are experimental studies and/or works in progress and do not represent the artists’ own styles, choices or finished work. Instead, they were chosen as good examples of relationships between text prompts and images, and as illustrations of some of the learning that occurred during the workshop.

A Selection of Experiments

“intergenerational trauma” by Naomi

The MidJourney image generator has a particularly painterly default style that it uses when the style is otherwise unspecified in the prompt. It is able to create many variations on a theme, from which the artist can then select their favourite(s) and have the AI upscale them into larger, more detailed versions.

The DALLE-2 image generator was better at photorealistic and pixel art style images, but the evocative painted look of MidJourney often suited the artists’ preferences.

“Cree uncle wearing a cowboy hat sitting on a 80s vintage sofa smoking a cigarette” by Holly

It required experimentation to find a textual representation that led MidJourney to generate images of the Indigenous people imagined by the artist.

Likely reflecting its image dataset, MidJourney had a tendency to generate Indigenous people local to the southern US.

“Cree uncles and Cree aunties socializing at a 90s low-income house party smoking cigarettes” by Holly

Faces were still problematic in both of the tools experimented with, but the artists used a variety of techniques to work around that or were able to “lean into” the distortions, appreciating the tool’s “glitches” and capabilities as its (currently) authentic voice.

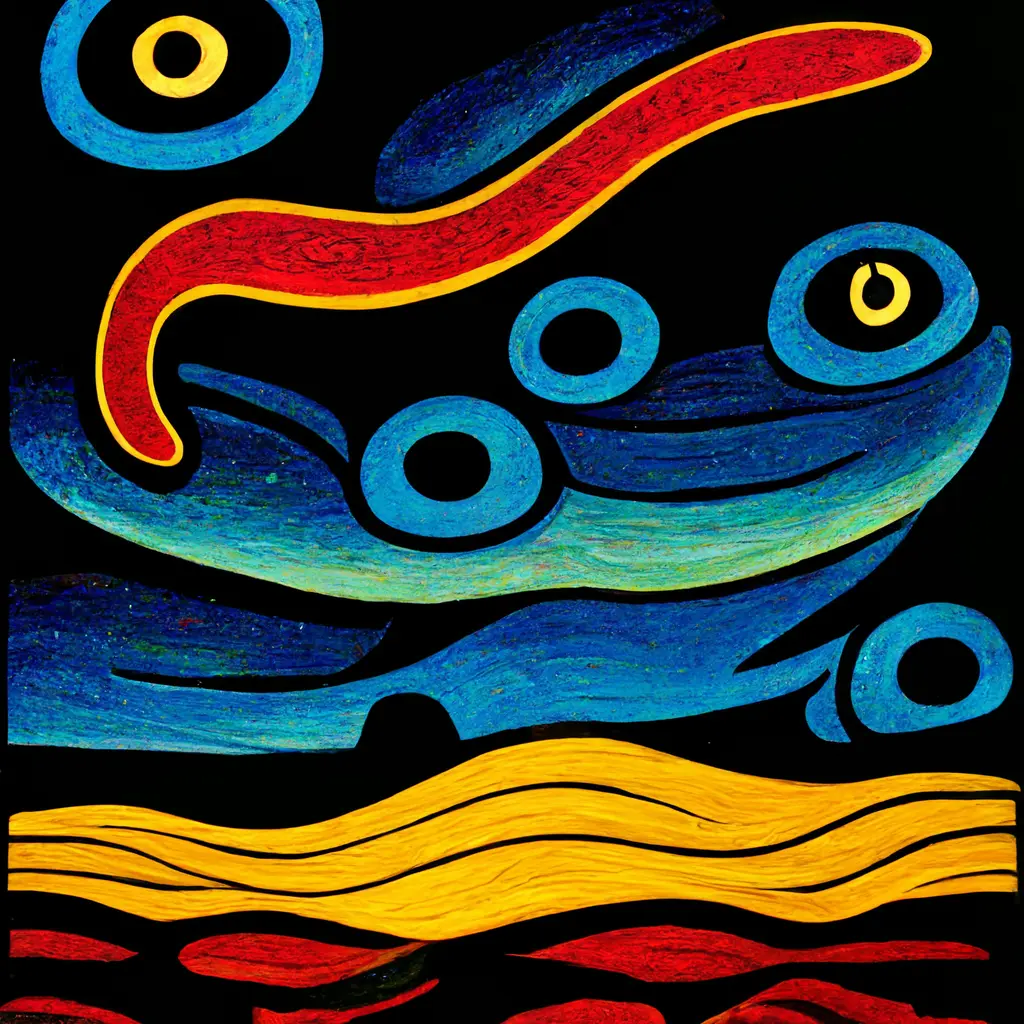

“storms spirits by norval morrisseau” by David

To get away from the default or random styles produced by the generative tools, the artists could add additional stylistic information to the prompt. Numerous discussions about ownership, appropriation, consent and remixing culture were sparked by the ease of generating images in particular styles.

“A person with two spirits, Métis sash flying, floating up to a big prairie sky with lots of big fluffy clouds, digital art” by Dayna

Artifacts and quirks of the generation created interesting questions for the artists: should they edit them out to match their vision or leave them in to show the hand of the AI collaborator?

DALLE-2 allowed for image editing as well, opening up additional options for manipulating the images.

“Art Nouveau image panel of barred puffbird with a pronounced mohawk hairdo in a lush jungle prime real estate in Ecuador dancing and oscillating wildly” by Sasha

The length, order and specificity of the prompt were a major part of the exploration.

“Many bison falling off a cliff in the style of Lisa Frank” by Holly

Combining subjects and themes with style descriptions that are not traditionally associated with those themes provided lots of opportunities.

“native american business suit” by David

Sometimes the generator made very interesting associations without explicit prompting, as in this case, with the carved wooden mannequin that wasn’t specified but was likely associated due to the usage of “native american”. Changing the language of how to describe the Indigeneity made significant differences to the representation.

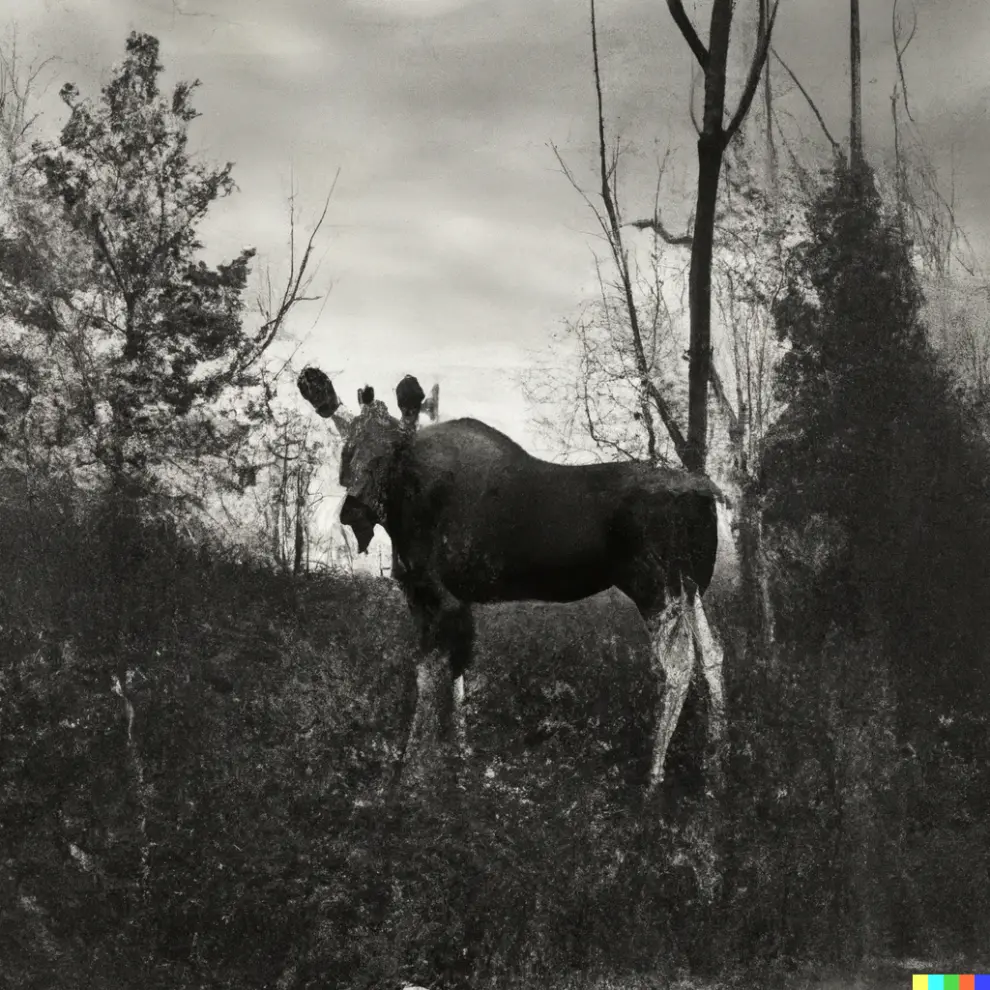

“a gothic photograph of a moose, wide shot, outdoors, cloudy afternoon, shot on a 4x5 camera” by Dayna

The DALLE-2 tool allowed for quite realistic photographic generated images, especially if the prompt contained specifics about the camera or film. Often these “realistic” images still contained “flaws”. These artifacts created a dilemma – cleaning up the images would be a significant amount of work. Less realistic styles on the other hand could be used with less editing.

“5 Cree aunties laughing, disposable camera” by Holly

“5 Cree aunties laughing, pixelart” by Holly

Holly experimented with variations of “5 Cree aunties laughing”, using a variety of prompts to get different styles of realism and feelings of spontaneity and engagement with the viewer. The tools had great difficulty counting.

“Pixel art raccoons trash clan” by Dayna

The sharing of text prompts and images among all the participants allowed for the cross-pollination of ideas, successful prompt strategies, and styles.

“a plumber fixing a flooded basement” by David

The ease of creating images allowed for life events concurrent to the workshop to be transformed into visual images. Will the emotional processing of difficult situations be affected by the ability to turn them into visual art? Would you post a photo about your flooded basement to your social media, or would it be an aesthetically pleasing generated image or just a text description?

“glowing pool of water in the style of Christi Belcourt” by Holly

Discussions that included references to artists or art history led to quick tests of how those artists might have interpreted different subjects (as reinterpreted by the AI).

“glowing pool of water with several aunties and uncles swimming and relaxing together” by Holly

The ease and speed of experimentation allowed participants to create images during presentations and other discussions, integrating concepts and imagery they had just heard or seen into their existing text prompt experiments.

“Speaks to the wind, tree branches swaying, teachings from land :: Norval Morrisseau, Norval Morriseau, Christi Belcourt :: layers of abstraction and transparency, flat soft gradients, flowing paint :: sparkles of light, veins of black --ar 9:16 --no primary colors” by Quinn

MidJourney allows for very sophisticated text prompts that include multiple prompts and “flags” or special commands to the tool that can control some of the parameters like aspect ratio and negative prompts for imagery to avoid.
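To make the structure of such a prompt easier to see, here is a minimal Python sketch that splits a prompt into its `::`-separated parts and its trailing `--` parameters. The function `parse_prompt` is a hypothetical helper written for illustration; it is not part of MidJourney or Discord, and it ignores features such as numeric weights after `::`. It also assumes that an em-dash before a parameter name is an auto-corrected `--`.

```python
import re


def parse_prompt(prompt: str) -> dict:
    """Split a MidJourney-style prompt into text segments and parameters.

    Illustrative only: this is an assumed, simplified reading of the syntax.
    """
    # Chat apps and word processors often turn "--" into an em-dash (U+2014).
    normalised = prompt.replace("\u2014", "--")
    # Text comes first; each " --name value" chunk after it is a parameter.
    text, *param_chunks = re.split(r"\s--(?=[a-z])", normalised)
    # "::" separates the independent parts of a multi-prompt.
    segments = [part.strip() for part in text.split("::") if part.strip()]
    params = {}
    for chunk in param_chunks:
        name, _, value = chunk.strip().partition(" ")
        params[name] = value.strip()
    return {"segments": segments, "params": params}


if __name__ == "__main__":
    parsed = parse_prompt(
        "Speaks to the wind, tree branches swaying, teachings from land "
        ":: sparkles of light, veins of black --ar 9:16 --no primary colors"
    )
    for segment in parsed["segments"]:
        print("segment:", segment)
    for name, value in parsed["params"].items():
        print("parameter:", name, "=", value)
```

Read this way, Quinn’s prompt has four parts (subject, artist references, paint qualities, and light details), followed by an aspect ratio (`ar`) and a negative prompt (`no`).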

Text-2-image tools appear simple to use, but actually contain incredible depth and complexity, moving the technical proficiency of image making from practising gesture to learning the parameters of the interface and understanding the mind of the machine.

Many thanks to Harbour Collective and all the participating artists!